Hi, I’m Chanyoung Chung, an integrated M.S. & Ph.D. student in the School of Computing at KAIST. I’m a member of the Big Data Intelligence Lab led by Professor Joyce Jiyoung Whang.

My current research interests are graph machine learning, graph neural networks, and knowledge graphs.

Education

- Korea Advanced Institute of Science and Technology (KAIST), Integrated M.S. & Ph.D. Student in School of Computing, Mar. 2021 - present

- B.S. in School of Computing & Department of Mathematical Sciences (Double Major), Mar. 2017 - Feb. 2021

Selected Publications

Representation Learning on Hyper-Relational and Numeric Knowledge Graphs with Transformers

Chanyoung Chung*, Jaejun Lee*, Joyce Jiyoung Whang (* equal contribution)

ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD) 2023

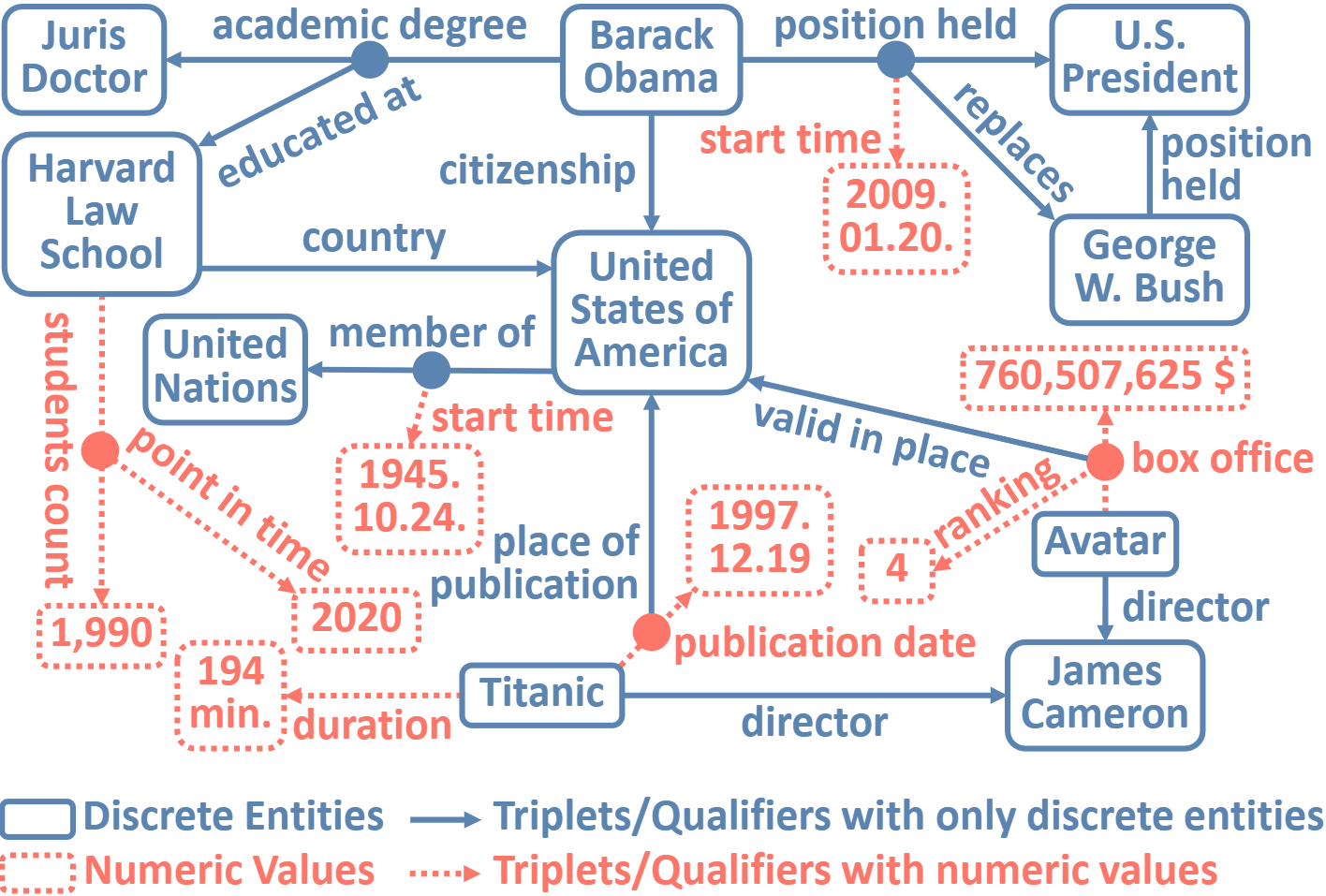

In a hyper-relational knowledge graph, a triplet can be associated with a set of qualifiers, where a qualifier is composed of a relation and an entity, providing auxiliary information for the triplet. While existing hyper-relational knowledge graph embedding methods assume that the entities are discrete objects, some information should be represented using numeric values, e.g., (J.R.R., was born in, 1892). Also, a triplet (J.R.R., educated at, Oxford Univ.) can be associated with a qualifier such as (start time, 1911). In this paper, we propose a unified framework named HyNT that learns representations of a hyper-relational knowledge graph containing numeric literals in either triplets or qualifiers. We define a context transformer and a prediction transformer to learn the representations based not only on the correlations between a triplet and its qualifiers but also on the numeric information. By learning compact representations of triplets and qualifiers and feeding them into the transformers, we reduce the computation cost of using transformers. Using HyNT, we can predict missing numeric values in addition to missing entities or relations in a hyper-relational knowledge graph. Experimental results show that HyNT significantly outperforms state-of-the-art methods on real-world datasets.
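As a rough illustration of the data described in this abstract, the sketch below stores a triplet together with its qualifiers and allows either an entity or a numeric literal as a value. The class name `HyperRelationalFact` and the helper `numeric_slots` are hypothetical names chosen for this example; they are not taken from the HyNT paper or its code, and the sketch only covers the data layout, not the transformers.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union

# A value is either an entity name or a numeric literal (assumption for this sketch).
Value = Union[str, int, float]


@dataclass
class HyperRelationalFact:
    """A primary triplet plus optional (relation, value) qualifiers."""
    head: str
    relation: str
    tail: Value
    qualifiers: List[Tuple[str, Value]] = field(default_factory=list)

    def numeric_slots(self) -> List[str]:
        """Return the relations (primary or qualifier) whose value is numeric."""
        slots = []
        if isinstance(self.tail, (int, float)):
            slots.append(self.relation)
        slots.extend(r for r, v in self.qualifiers if isinstance(v, (int, float)))
        return slots


# The two examples given in the abstract.
facts = [
    HyperRelationalFact("J.R.R.", "was born in", 1892),
    HyperRelationalFact("J.R.R.", "educated at", "Oxford Univ.", [("start time", 1911)]),
]

for fact in facts:
    print(fact.head, fact.relation, fact.tail, fact.numeric_slots())
```

The first fact carries its numeric value in the triplet itself, while the second carries it in a qualifier, which matches the two cases the abstract mentions.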

InGram: Inductive Knowledge Graph Embedding via Relation Graphs

Jaejun Lee, Chanyoung Chung, Joyce Jiyoung Whang

International Conference on Machine Learning (ICML) 2023

Inductive knowledge graph completion has been considered as the task of predicting missing triplets between new entities that are not observed during training. While most inductive knowledge graph completion methods assume that all entities can be new, they do not allow new relations to appear at inference time. This restriction prohibits the existing methods from appropriately handling real-world knowledge graphs where new entities accompany new relations. In this paper, we propose an INductive knowledge GRAph eMbedding method, InGram, that can generate embeddings of new relations as well as new entities at inference time. Given a knowledge graph, we define a relation graph as a weighted graph consisting of relations and the affinity weights between them. Based on the relation graph and the original knowledge graph, InGram learns how to aggregate neighboring embeddings to generate relation and entity embeddings using an attention mechanism. Experimental results show that InGram outperforms 14 different state-of-the-art methods on varied inductive learning scenarios.
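To make the idea of a relation graph more concrete, here is a minimal sketch that builds a weighted graph whose nodes are relations. The affinity used here, the number of entities two relations share, is an assumption made only for illustration; the abstract does not define the affinity weights, and this is not claimed to be InGram's formulation. The function name `build_relation_graph` and the placeholder triplets are likewise hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def build_relation_graph(triplets):
    """Return {(relation_a, relation_b): weight} for relations that share entities.

    Weight = number of shared entities (an assumed affinity, for illustration only).
    """
    entities_of = defaultdict(set)
    for head, relation, tail in triplets:
        entities_of[relation].update((head, tail))

    weights = {}
    for r1, r2 in combinations(sorted(entities_of), 2):
        shared = len(entities_of[r1] & entities_of[r2])
        if shared:
            weights[(r1, r2)] = shared
    return weights


# Placeholder knowledge graph with generic entity and relation names.
triplets = [
    ("e1", "r1", "e2"),
    ("e1", "r2", "e3"),
    ("e2", "r3", "e4"),
]
relation_graph = build_relation_graph(triplets)
print(relation_graph)
```

Because the graph is defined over relations rather than entities, a relation that first appears at inference time can still be placed in it from the triplets it occurs in, which is the property the abstract relies on.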
